Update from the Field – SQL PASS SUMMIT 2012

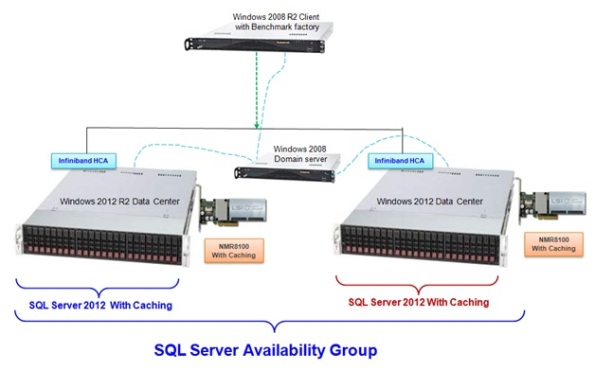

This week the Professional Association for SQL Server (PASS) has its annual conference in Seattle. It is always a good show to get updates on new developments for SQL Server and to get a feeling for the adoption rate of various features. At this show LSI is demonstrating a benchmark running on a SQL Server 2012 AlwaysOn Availability Group (a good article discussing this feature is available here). In the demo we are showing a database in an AlwaysOn Availability Group cluster (in synchronous mode) running on local storage. This is a type of database clustering solution that uses log shipping between databases to create a High Availability (HA) solution. Both sides of the cluster have a complete copy of the database rather than sharing a single copy on fault-tolerant shared storage. LSI also has a second setup with a database running on SAN storage – the traditional storage for a clustered architecture. The AlwaysOn Availability Group cluster is running at almost three times the transaction rate of the SAN system and at a fraction of the response time.

There is an important difference between the two configurations. The local storage setup is not using disks alone, but rather Nytro MegaRAID controllers. These are high performance RAID controllers with flash integrated as a large cache to accelerate the disks. Mellanox was also kind enough to let us borrow a couple of Infiniband HCAs for a high performance connection between the clustered databases. With both high performance local storage and high performance networking, a better performing HA SQL Server solution can be created very cost effectively compared to alternative SAN based architectures. The full setup is illustrated below:

Prior to AlwaysOn Availability Groups, SQL Server had another technology called Database Mirroring that used log shipping to create an HA database using local storage. It worked, but the applications had to be aware of the backup server, and the management of the HA solution was very different from the management of a traditional SQL HA solution using Microsoft failover clustering. This different management was targeted at database administrators more than server or storage administrators. Database mirroring was a solution that worked technically, but because of its different management, it introduced serious risk of human error. The administrators tasked with availability and database administration had to use the same tool rather than separate ones, and the application team had to make sure that the connections to the database were set up properly for everything to work.

An enterprise database that requires HA typically supports very important applications where the cost of downtime dwarfs all other considerations and management risks are taken very seriously. One of the huge advancements with Availability Groups is that the management is done through the Microsoft cluster manager and applications no longer need to be aware of whether the database is clustered or not. This change has made a huge difference to the perception of the solution, and it is great to talk with customers about how they are using Availability Groups and how they plan to. With applications getting better and better at providing robust solutions that can leverage local storage, the future is very bright for high performance local storage solutions.

Flash and Hadoop

Over the last several months I have been running hundreds of jobs on various small scale Hadoop clusters. I have compiled the results, come up with some conclusions, and will have more details posted here. If you have time available, on Tuesday Sept 11, you can get a sneak peek of the testing in a webinar (registration required) on big data and capital markets here.

Solid State Storage and the Mainstream

In 2008 I was on a panel at WinHEC alongside other SSD and disk industry participants. The question came up – When would SSDs become the default option rather than a premium? Most answers came in the form of – there are places where both disks and SSDs make sense. When my opportunity to comment came, I replied simply “five to ten years,” which added a bit of levity to the panel where the question had been skirted.

Now that several years have passed, I can look back at how the industry has moved forward and I decided that I was ready to update my prediction. In a part of the market there is an unrelenting demand for additional capacity. There are enough applications where capacity beats out the gains in performance, form factor, and power consumption that come with SSDs to give products that optimize price per capacity a bright future. With disk manufacturers’ single focus on optimizing cost per capacity, performance optimization has been left to SSDs. In applications that support business processes where performance and capacity are both important, this is a profound shift that has really only begun. SSDs are not going to replace disks wholesale, but using some SSD capacity will become the norm.

One of the aspects of a market that moves from niche to mainstream is that the focus has to shift towards designing for mass markets with a focus on price points and ease-of-use. After looking at the market directions I reached the following conclusion: the biggest winners will be the companies that make it easiest to effectively use SSDs across the widest range of applications. I conducted a wide survey of the SSD industry, weighed some personal factors, and moved from Texas to Colorado to join LSI in the Accelerated Solutions Division.

Today LSI announced an application acceleration product family that fully embraces the vision of enabling the broadest number of applications to benefit from solid state technology, from DAS to SAN, to 100% flash solutions. The problems that SSDs solve and the way that they solve them (eliminating the time that is spent waiting on disks) have not really changed, but the capacity and entry level price points have, allowing for much broader adoption.

Using SSDs still requires sophisticated flash management (which is still highly variable from SSD to SSD), data protection from component failures, and integration to use the SSD capacity effectively with existing storage – so there is still plenty of room to add value to the raw flash. In the next wave of flash adoptions, expect to see a much higher attach rate of SSDs to servers. A shift is taking place from trying to explain why SSDs would be justified, to explaining why SSDs are not justified. In this next phase reducing the friction to enable deployment of flash far and wide is the key.

ZFS Tuning for SSDs

Update – Some of the suggestions below have been questioned for a typical ZFS setup. To clarify, these settings should not be implemented on most ZFS installations. The L2ARC is designed to ensure that by default it will never hurt performance. Some of the changes below can have a negative impact on workloads that are not using the L2ARC, and they accept the possibility of worse performance in some workloads in exchange for better performance with cache-friendly workloads. These suggestions are intended for ZFS implementations where the flash cache is one of the main motivating factors for deploying ZFS – think high SSD to disk ratios. In particular, these changes were tested with an Oracle database on ZFS.

In 2008 Sun Microsystems announced the availability of a feature in ZFS that could use SSDs as a read or write cache to accelerate ZFS. A good write-up on the implementation of the level 2 adaptive replacement cache (L2ARC) by a member of the fishworks team is available here. In 2008, flash SSDs were just starting to penetrate the enterprise storage market, and this cache was written with many of the early flash SSD issues in mind. First, it warms quite slowly, defaulting to a maximum cache load rate of 8 MB/s. Second, to avoid being in a write-heavy path, it is explicitly set outside of the data eviction path from the ZFS memory cache (ARC). This prevents it from behaving like a traditional level 2 cache and causes it to fill more slowly with mainly static data. Finally, the default record size of the file system is rather big (128 KB), and the default assumption is that for sequential scans it is better to just read from the disk and skip the SSD cache.

Many of the assumptions about SSDs don’t line up with current generation SSD products. An enterprise class SSD can write quickly, has high capacity, has higher sequential bandwidth than the disk storage systems, and has a long life span even under a heavy write load. There are a few parameters that can be changed as a best practice when enterprise SSDs are being used for the L2ARC in ZFS:

Record Size

Change the Record Size to a much lower value than 128 KB. The L2ARC fetches the full record on a read, and a 128 KB I/O to an SSD uses up device bandwidth and increases the response time.

This can be set dynamically (for new files) with:

# zfs set recordsize=<record size> <filesystem>

l2arc_write_max

Change the l2arc_write_max to a higher value. Most SSDs specify a device life in terms of full device write cycles per day. For instance, say you have 700 GB of SSDs that support 10 device cycles per day for 5 years. This equates to a maximum write rate of 7,000 GB/day, or about 83 MB/s. As the setting is the maximum write rate, I would suggest at least doubling the specified maximum rate of the drive. As the L2ARC is a read-only cache that can abruptly fail without impacting file system availability, the only risk of too high a write rate is wearing out the drive earlier. This throttle was put in place when early SSDs with unsophisticated controllers were the norm. Early SSDs could experience significant performance problems during writes that would limit the performance of the reads the cache was meant to accelerate. Modern enterprise SSDs are orders of magnitude better at handling writes, so this is not a major concern.

On Solaris, this parameter is set by adding the following line to the /etc/system file:

set zfs:l2arc_write_max=<maximum bytes per second>
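The endurance arithmetic above can be sketched as a small bash helper. This is only an illustration: the function name `l2arc_write_rate` is made up for this example, the 700 GB and 10 cycles/day inputs are the figures from the text, and 1 GB is treated as 1024^3 bytes, which is the convention that reproduces the 83 MB/s figure. The 5-year life does not enter the calculation because the cycles-per-day rating already fixes the daily write budget.

```shell
#!/bin/bash
# Sketch: derive a candidate l2arc_write_max value from an SSD endurance rating.
# Inputs are illustrative; substitute the figures from your drive's data sheet.

# l2arc_write_rate CAPACITY_GB CYCLES_PER_DAY MULTIPLIER
# Prints a write rate in bytes per second (1 GB = 1024^3 bytes, 86400 s per day).
l2arc_write_rate() {
    local capacity_gb=$1 cycles_per_day=$2 multiplier=$3
    local bytes_per_day=$(( capacity_gb * cycles_per_day * 1024 * 1024 * 1024 ))
    echo $(( bytes_per_day * multiplier / 86400 ))
}

rated=$(l2arc_write_rate 700 10 1)     # rate implied by the endurance rating
doubled=$(l2arc_write_rate 700 10 2)   # the "at least double" suggestion

# Round to the nearest MiB/s for display.
echo "rated:   $rated bytes/s (~$(( (rated + 524288) / 1048576 )) MB/s)"
echo "doubled: $doubled bytes/s"
echo "set zfs:l2arc_write_max=$doubled"
```

The last line prints a candidate entry for /etc/system; treat it as a starting point to validate against your own workload rather than a recommended value.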

l2arc_noprefetch

Set l2arc_noprefetch=0. By default this is set to one, which skips the L2ARC for the prefetch reads that are used for sequential reading. The idea here is that the disks are good at sequential reads, so just read from them. With PCIe SSDs readily available with multiple GB/s of bandwidth, even sequential workloads can get a significant performance boost. Changing this parameter will put the L2ARC in the prefetch read path and can make a big difference for workloads that have a sequential component.

On Solaris, this parameter is set by adding the following line to the /etc/system file:

set zfs:l2arc_noprefetch=0

SSSI Performance Test Specification

I recently joined the governing board of SNIA’s Solid State Storage Initiative (SSSI), an organization designed to promote solid state storage and standards around the technology. One of the biggest points of contention in the SSD space is comparing various devices’ performance claims. The reason this is fairly contentious is that test parameters can have a dramatic impact on the achievable performance. Different flash controllers have been implemented in ways where the amount of over provisioning (see my post on this), the compressibility of the data, and how full the device is can have a dramatic performance impact. To help standardize the testing process and methodology for SSDs the SSSI developed the Performance Test Specification (PTS).

This specification outlines the requirements for a test tool and defines the methodologies to use to test an SSD and the data that needs to be reported. The SSSI is actively working to promote this standard. One of the hurdles to adoption is that many vendors are using their own testing methodology and tools internally and don’t want to modify their processes. There is also hesitation to present numbers measured without best-case assumptions, since they may be compared with competitors’ numbers obtained under more favorable circumstances. This can make life more difficult for end users, as there is a gap between having a specification and having an easy way to run the test.

I spent some time over the past week making a bash script that uses the Flexible I/O utility (you will need to download this utility to use the script) to implement my reading of the IOPS section of the test. I also made an Excel template to paste the results from the script into. You can use this to select the right measurement window and create an IOPS chart for various block sizes. There are still a few parameters that you need to edit in the script and you will have to do some manual editing of the charts to meet the reporting requirements, but I hope that this will help as a starting point for users that want to get an idea of what the spec does and run tests according to its methodology.

Script (Copy and Paste):

#!/bin/bash

#This Script Runs through part of the SSSI PTS using fio as the test tool for section 7 IOPS

#By Jamon Bowen

#THIS IS PROVIDED AS IS WITH NO WARRANTIES

#THIS SCRIPT OVERWRITES DATA AND CAN CONTRIBUTE TO SSD WEAR OUT

#check to see if right number of parameters.

if [ $# -ne 1 ]

then

echo "Usage: $0 /dev/<device to test>"

exit 1

fi

#The output from a test run is placed in the ./results folder.

#This folder is recreated before every run.

rm -rf results

mkdir results

# Section 7 IOPS test

echo "Running on SSSI PTS 1.0 Section 7 - IOPS on device: $1"

echo "Device information:"

fdisk -l $1

#7.1 Purge device

echo

echo "****Prior to running the test, Purge the SSS to be in compliance with PTS 1.0"

#Set these variables to the test operator's choice of SIZE, outstanding IO per thread, and number of threads

#To test the full device use fdisk -l to get the device size and update the values below.

SIZE=901939986432

OIO=64;

THREADS=16;

echo

echo "Test range 0 to $SIZE"

echo "OIO/thread = $OIO, Threads = $THREADS"

echo "Test Start time: `date`"

echo

#7.2(b) Workload independent preconditioning

#Write 2x device user capacity with 128 KiB sequential writes.

echo "****Preconditioning"

./fio --name=precondition --filename=$1 --size=$SIZE --iodepth=$OIO --numjobs=1 --bs=128k --ioengine=libaio --invalidate=1 --rw=write --group_reporting --eta never --direct=1 --thread --refill_buffers

echo

echo "****50% complete: `date`"

./fio --name=precondition --filename=$1 --size=$SIZE --iodepth=$OIO --numjobs=1 --bs=128k --ioengine=libaio --invalidate=1 --rw=write --group_reporting --eta never --direct=1 --thread --refill_buffers

echo

echo "****Precondition complete: `date`"

echo

#IOPS test 7.5

echo "****Random IOPS TEST"

echo "================"

echo "Pass, BS, %R, IOPS" >> "results/datapoints.csv"

for PASS in `seq 1 25`;

do

echo "Pass, BS, %R, IOPS, Time"

for i in 512 4096 8192 16384 32768 65536 131072 1048576 ;

do

for j in 0 5 35 50 65 95 100;

do

IOPS=`./fio --name=job --filename=$1 --size=$SIZE --iodepth=$OIO --numjobs=$THREADS --bs=$i --ioengine=libaio --invalidate=1 --rw=randrw --rwmixread=$j --group_reporting --eta never --runtime=60 --direct=1 --norandommap --thread --refill_buffers | grep iops | gawk 'BEGIN{FS = "="}; {print $4}' | gawk '{total = total +$1}; END {print total}'`

echo "$PASS, $i, $j, $IOPS, `date`"

echo "$PASS, $i, $j, $IOPS" >> "results/datapoints.csv"

done

done

done

exit
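Once a run has finished, the results file can be summarized from the shell before it goes into a spreadsheet. The sketch below assumes the "Pass, BS, %R, IOPS" layout that the script above writes to results/datapoints.csv; the function name `summarize_iops` and the idea of averaging over a pass range you choose are illustrative and are not part of the PTS. You still need to confirm that the passes you pick meet the steady-state criteria in the specification.

```shell
#!/bin/bash
# Sketch: average IOPS per block size and read percentage over a range of passes.
# Usage: summarize_iops FILE FIRST_PASS LAST_PASS
# Output: one "BS, %R, average IOPS" line per combination, sorted by BS then %R.
summarize_iops() {
    local file=$1 first=$2 last=$3
    awk -F', *' -v first="$first" -v last="$last" '
        NR == 1 { next }                    # skip the header line
        $1 >= first && $1 <= last {         # keep only passes inside the window
            key = $2 ", " $3
            sum[key] += $4
            count[key]++
        }
        END {
            for (key in sum)
                printf "%s, %.0f\n", key, sum[key] / count[key]
        }
    ' "$file" | sort -t, -k1,1n -k2,2n
}

# Example (hypothetical window of the last five passes):
#   summarize_iops results/datapoints.csv 21 25
```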

Is There Room for Solid State Disks in the Hadoop Framework?

The MapReduce framework developed by Google has led to a revolution in computing. This revolution has accelerated as the open source Apache Hadoop framework has become widely deployed. The Hadoop framework includes important elements that leverage hardware layout, new recovery models, and help developers easily write parallel programs. A big part of the framework is a distributed parallel file system (HDFS) that uses local commodity disks to keep the data close to the processing, offers lots of managed capacity, and triple mirrors for redundancy. One of the big efficiencies in Hadoop for “Big Data” style workloads is that it moves the process to the data rather than the data to the process. Knowledge and use of the storage layout is part of the framework that makes it effective.

On the surface, this is not an environment that screams for SSDs due to the focus on effectively leveraging commodity disks. Some of the concepts of the framework can be applied to other areas that impact SSDs. However, one of the major impacts of Hadoop is that it enables software developers without a background in parallel programming to write highly parallel applications that can process large datasets with ease. This aspect of Hadoop is very important and I wanted to see if there was a place for SSDs in production Hadoop installations.

I worked with some partners and ran through a few workloads in the lab to get a better understanding of the storage workload and see where SSDs can fit in. I still have a lot of work to do on this but I am ready to draw a few conclusions. First, I’ll attempt to describe how Hadoop uses storage using a minimum of technical Hadoop terminology.

There is one part of the framework that is critical to understand: All input data is interpreted in a <key, value> form. The framework is very flexible in what these can be – for instance, one can be an array – but this format is required. Some common examples of <key, value> pairs are <word, frequency>, <word, location>, and <source web address, destination web address>. These example <key, value> pairs are very useful in searching and indexing. More complex implementations can use the output of one MapReduce run as the input to additional runs.

To understand the full framework see the Hadoop tutorial: http://hadoop.apache.org/common/docs/current/mapred_tutorial.html

Here is my simplified overview, highlighting the storage use:

Map: Input data is broken into shards and placed on the local disks of many machines. In the Map phase this data is processed into an intermediate state (the data is “Mapped”) on a cluster of machines, each of which is working only on its shard of the data. Doing this allows the input data in this phase to be fetched from local disks. Limiting what you can do to only local operations on a piece of the data within a local machine allows Hadoop to turn simple functional code into a massively parallel operation. The intermediate data can be bigger or smaller than the input, and it is sorted locally and grouped by key. The intermediate data sorts that are too large to fit in memory take place on local disk (outside of the parallel file system).

Reduce: In this phase the data from all the different map processes is passed over the network to the reduce process that has been assigned to work on a particular key. Many reduce processes run in parallel, each on separate keys. Each reduce process runs through all of the values associated with the key and outputs a final value. This output is placed locally in the parallel distributed file system. Since the output of one map-reduce run is often the input to a second one, this pre-shards the input data for subsequent map-reduce passes.
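The flow of <key, value> pairs through the two phases can be imitated on a single machine with a shell pipeline. This is only an analogy for the <word, frequency> example, not Hadoop code: the function names are made up for the illustration, everything runs in one process chain instead of across a cluster, and the sort step stands in for the local sort and the grouping by key described above.

```shell
#!/bin/bash
# Sketch: single-machine analogy of a <word, frequency> MapReduce job.

# Map: emit one "<word><TAB>1" pair for every word in the input.
map_words() {
    tr -cs '[:alpha:]' '\n' | tr '[:upper:]' '[:lower:]' | awk 'NF { print $1 "\t" 1 }'
}

# Sort/shuffle: bring identical keys together so each key can be reduced in one pass.
shuffle() {
    LC_ALL=C sort -k1,1
}

# Reduce: sum the values for each key; relies on the input being grouped by key.
reduce_counts() {
    awk -F'\t' '
        $1 != key { if (NR > 1) print key "\t" total; key = $1; total = 0 }
        { total += $2 }
        END { if (NR > 0) print key "\t" total }
    '
}

printf 'the quick fox\nthe lazy dog and the fox\n' | map_words | shuffle | reduce_counts
```

In a real cluster the map step runs on each node against its local shard, and the sorted intermediate data is what spills to local disk when it does not fit in memory.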

Over a run there are a few storage/data-intensive components:

- Streaming the data in from local disk to the local processor.

- Sorting on a temporary space when the intermediate data is too large to sort in memory.

- Moving the smaller chunks of data across the network to be grouped by key for processing.

- Streaming the output data to local disk.

There are two places that SSDs can plug into this framework easily and a third place that SSDs and non-commodity servers can be used to tackle certain problems more effectively.

First, the easy ones:

- Using SSDs for storage within the parallel distributed file system. SSDs can be used to deliver a lower cost per MB/s than disks (this is highly dependent on the manufacturer’s design), although the SSD has a higher cost per MB. For workloads where the dataset is small and processing time requirements are high, SSDs can be used in place of disks. Right now with most Hadoop problems focusing on large data sets, I don’t expect this to be the normal deployment, but this will grow as the price per MB difference between SSDs and HDDs compresses.

- Using SSDs for the temporary space. The temporary space is used for sorting that is I/O intensive, and it is also accessed randomly as the reduce processes fetch the data for the keys that they need to work with. This is an obvious place for SSDs as it can be much smaller than the disks used for the distributed file system, and bigger than the memory in each node. If the jobs that are running result in lots of disk sorts, using SSDs here makes sense.

The final use case is of the most interest to enterprise SSD suppliers and is based on changing the cluster architecture to impact one of the fundamental limitations of the MapReduce framework (from the Google Labs paper):

“Network bandwidth is a relatively scarce resource in our computing environment. We conserve network bandwidth by taking advantage of the fact that the input data (managed by GFS [8]) is stored on the local disks of the machines that make up our cluster.”

The key here is that “Network Bandwidth” is scarce because the number of nodes in the cluster is large. This inherently limits the network performance due to cost concerns. The CPU resources are actually quite cheap in commodity servers. So this setup works well with many workloads that are CPU intensive but where the intermediate data that needs to be passed around is small. However, this is really an attribute of the cluster setup more than of the Hadoop framework itself. Rather than using commodity machines you can use a more limited number of larger nodes in the cluster and a high performance network. Instead of using lots of 1 CPU machines with GigE networking, you can use quad socket servers with many cores and QDR Infiniband. With 4x the CPU resources and 40x the node to node bandwidth, you now have a cluster that is geared towards jobs that require lots of intermediate data sharing. You also now need the local storage to be much faster to keep the processors busy.

High bandwidth/high capacity enterprise SSDs, particularly PCIe SSDs, fit well into this framework as you want to have higher local storage bandwidth than node-to-node. This is in effect a smaller cluster of “fat” nodes. The processing power in this setup is more expensive, so this isn’t the best setup for CPU intensive jobs.

Hadoop is a powerful framework. One of its key advantages is that it enables highly parallel programs to be written by software engineers without a background in parallel programming. This is one reason it has grown so popular and why it is important for hardware vendors to see where they fit in. There is a lot of flexibility in the hardware layout, and different cluster configurations make sense for tackling different types of problems.

Where Does Data Management Software Belong?

It is interesting to see how some of the developments in the IT space are governed by intradepartmental realities. I see this most pronounced in the storage team’s perspective versus the rest of the IT team. Storage teams are exceptionally conservative by nature. This makes perfect sense – servers can be rebooted, applications can be reinstalled, hardware can be replaced – but if data is lost there are no easy solutions.

Application teams are aware of the risk of data loss, but are much more concerned with the day-to-day realities of managing an application – providing a valuable service, adding new features, and scaling performance. This difference in focus can lead to very real differences in viewpoints and a bit of mutual distrust between the application and storage teams.

The best example of this difference is where the storage team classifies storage array controllers as hardware solutions, when in reality most are just a predefined server configuration running data management software with disk shelves attached. Although the features provided by the controllers are important (like replication, deduplication, snapshot, file services, backup, etc.) they are inherently just software packages.

More and more of the major enterprise applications are now building in the same feature set traditionally found in the storage domain. With Oracle there is RMAN for backups, ASM for storage management, Data Guard for replication, and the Flash Recovery Area for snapshots. In Microsoft SQL Server there is database mirroring for synchronous or asynchronous replication and database snapshots. If you follow VMware’s updates, it is easy to see they are rapidly folding in more storage features with every release (as an aside, one of the amazing successes of VMware is in making managing software feel like managing hardware). Since solutions at the application level can be aware of the layout of the data, some of these features can be implemented much more efficiently. A prime example is replication, where database level replication tools tie into the transaction logging mechanism and send only the logs rather than blindly replicating all of the data.

The biggest hurdle that I have seen at customer sites looking to leverage these application level storage features is the resistance from the storage team in ceding control, either due to lack of confidence in the application team’s ability to manage data, internal requirements, or turf protection. One of the most surprising reasons I have seen PCIe SSD solutions selected by application architects is to avoid even having these internal discussions!

As the applications that support important business processes continue to grow in their data management sophistication there will be more discussions on where the data management and protection belong. Should they be bundled with the storage media? Bundled within the application? Or should they be purchased as separate software – perhaps as virtual appliances?

Where do you think these services will be located going forward? Let me know below in the comments.

eMLC Part 2: It’s About Price per GB

I have had the chance to meet with several analysts over the past couple of weeks and have raised the position that with eMLC the long awaited price parity of Tier-1 disks and SSDs is virtually upon us. I had a mixed set of reactions, from “nope, not yet” to “sorry if I don’t act surprised, but I agree.” For the skeptics I promised that I would compile some data to back up my claim.

For years the mantra of the SSD vendor was to look at the price per IOPS rather than the price per GB. The Storage Performance Council provides an excellent source of data that facilitates that comparison in an audited forum with their flagship SPC-1 benchmark. The SPC requires quite a bit of additional information to be reported for the result to be accepted, which provides an excellent data source when you want to examine the enterprise storage market. If you bear with me I will walk through a few ways that I look through the data, and I promise that this is not a rehash of the cost per IOPS argument.

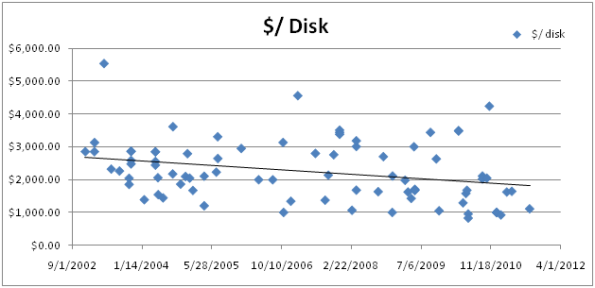

First, if you dig through the reports you can see how many disks are included in each solution as well as the total cost. The chart below is an aggregation of the HDD based SPC-1 submissions showing the reported Total Tested Storage Configuration Price (including three-year maintenance) divided by the number of HDDs reported in the “priced storage configuration components” description. It covers data from 12/1/2002 to 8/25/2011:

Now, let’s take it as a given that an SSD can deliver much higher IOPS than an HDD of equivalent capacity, and that price per GB is the only advantage disks bring to the table. The historical way to get higher IOPS from HDDs was to use lots of drives and short stroke them. The modern day equivalent is using low capacity, high performance HDDs rather than cheaper high capacity HDDs. With the total cost of enterprise disk at close to $2,000 per HDD, the $/GB of enterprise SSDs determines the minimum logical capacity of an HDD: below that capacity, the same $2,000 buys at least as much SSD capacity. Here is an example of various SSD $/GB levels and the associated minimum disk capacity points:

| Enterprise SSD $/GB | Minimum HDD capacity |
| --- | --- |
| $30 | 67 GB |
| $20 | 100 GB |
| $10 | 200 GB |
| $7 | 286 GB |
| $5 | 400 GB |
| $3 | 667 GB |
| $1 | 2,000 GB |

To get to the point that 300 GB HDDs no longer make sense, the enterprise SSD price per GB just needs to be around $7/GB, and 146 GB HDDs are gone at around $14/GB. Keep in mind that this is the price of the SSD capacity before redundancy and overhead to make it comparable to the HDD case.
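The break-even capacities above are simply the per-HDD cost divided by the SSD price per GB. A minimal bash sketch of that arithmetic follows, assuming the roughly $2,000 per HDD figure from the text; the names `HDD_COST` and `min_hdd_capacity` are made up for the illustration, and only whole-dollar prices are handled.

```shell
#!/bin/bash
# Sketch: HDD capacity below which the same spend buys an equal amount of SSD capacity.
# HDD_COST is the approximate total cost per enterprise HDD quoted in the text.
HDD_COST=2000

# min_hdd_capacity SSD_PRICE_PER_GB
# Prints the break-even capacity in GB, rounded to the nearest whole GB.
min_hdd_capacity() {
    local price=$1
    echo $(( (HDD_COST + price / 2) / price ))
}

for price in 30 20 10 7 5 3 1; do
    printf '$%s/GB -> %s GB\n' "$price" "$(min_hdd_capacity "$price")"
done
```

Changing `HDD_COST` shows how sensitive the crossover points are to the per-drive cost assumption.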

It’s not fair (or permitted use) to compare audited SPC-1 data with data that has not gone through the same rigorous process, so I won’t make any comparisons here. However, I think that when looking at the trends, it is clear that the low capacity HDDs that are used for Tier-1 storage are going away sooner rather than later.

About the Storage Performance Council (SPC)

The SPC is a non-profit corporation founded to define, standardize and promote storage benchmarks and to disseminate objective, verifiable storage performance data to the computer industry and its customers. The organization’s strategic objectives are to empower storage vendors to build better products as well as to stimulate the IT community to more rapidly trust and deploy multi-vendor storage technology.

The SPC membership consists of a broad cross-section of the storage industry. A complete SPC membership roster is available at http://www.storageperformance.org/about/roster/.

A complete list of SPC Results is available at http://www.storageperformance.org/results.

SPC, SPC-1, SPC-1 IOPS, SPC-1 Price-Performance, SPC-1 Results, are trademarks or registered trademarks of the Storage Performance Council (SPC)

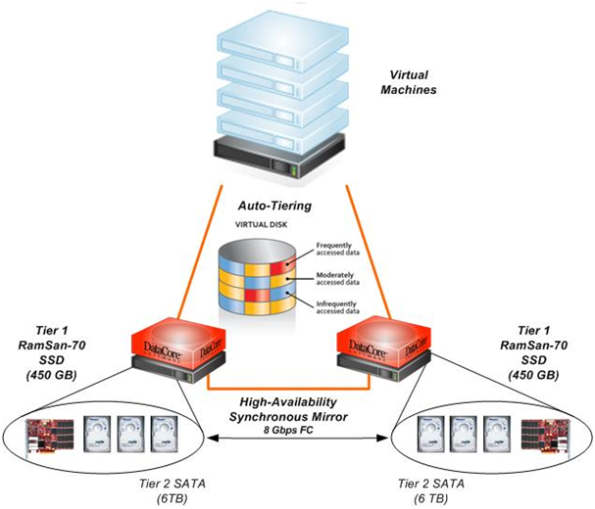

Update from the Field: VMworld 2011

This week in TMS’s booth (booth #258) at VMworld we have a joint demo with our partner DataCore that shows an interesting combination of VMware, SSDs, and storage virtualization. We are using DataCore’s SANsymphony-V software to create an environment with the RamSan-70 as tier 1 storage and SATA disks as tier 2. The SANsymphony-V software handles the tiering, high-availability mirroring, snapshots, replication, and other storage virtualization features.

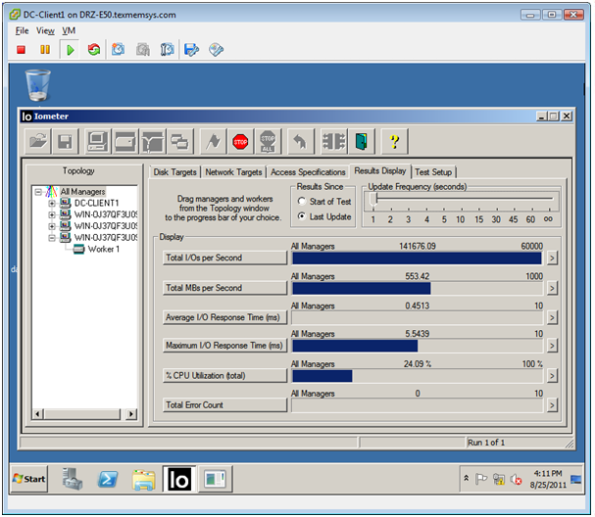

Iometer is running on four virtual machines within a server and handling just north of 140,000 4 KB read IOPS. A screen shot from Iometer on the master manager is shown below:

Running 140,000 IOPS is a healthy workload, but the real benefit of this configuration is its simplicity. It uses just two 1U servers and hits all of the requirements for a targeted VMware deployment. Much of the time, RamSans are deployed in support of a key database application where exceptionally high performance shared SSD capacity is the driving requirement. RamSan systems implement a highly parallel hardware design to achieve an extremely high performance level at exceptionally low latency. This is an ideal solution for a critical database environment where the database has all of the tools integrated that are normally “outsourced” to a SAN array (such as clustering, replication, snapshots, backup, etc.). However, in a VMware environment many physical and virtual servers are leveraging the SAN, so pushing the data management to each application is impractical.

Caching vs. Tiering

One of the key use cases of SSDs in VMware environments is automatically accelerating the most accessed data as new VMs are brought online, grow over time, and retire. The benefit of a flexible virtual infrastructure makes seamless automatic access to SSD capacity more important. There are two accepted approaches to properly integrating an SSD in a virtual environment; I’ll call them caching and tiering. Although similar on the surface, they have some important distinctions.

In a caching approach, the data remains in place (in its primary location) and a cached copy is propagated to SSD. This setup is best suited to heavily accessed read data because write-back caches break all of the data management storage features running on the storage behind it (Woody Hutsell discusses this in more depth in this article). This approach is effective for frequently accessed static data, but it is not ideal for frequently changing data.

In tiering, the actual location of the data moves from one type of persistent storage to another. In the read-only caching case it is possible to create a transparent storage cache layer that is managed outside of the virtualized layer, but when tiering with the SSD, tiering and storage virtualization need to be managed together.
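The distinction can be made concrete with a toy model. The sketch below is purely illustrative and is not how SANsymphony-V or any real product is implemented; the class and method names are hypothetical, and plain dictionaries stand in for storage devices. The point it shows is that a read cache holds a copy while the primary location stays authoritative, whereas a tiered store keeps each block on exactly one tier.

```python
class ReadCache:
    """Read-only caching: data stays in its primary location; SSD holds copies."""

    def __init__(self, primary):
        self.primary = primary  # authoritative copy of every block
        self.ssd = {}           # cached copies of recently read blocks

    def read(self, block):
        if block not in self.ssd:
            self.ssd[block] = self.primary[block]  # copy, do not move
        return self.ssd[block]

    def write(self, block, data):
        self.primary[block] = data  # writes land on primary storage
        self.ssd.pop(block, None)   # drop the now-stale cached copy


class TieredStore:
    """Tiering: each block lives on exactly one tier and can be moved."""

    def __init__(self, blocks):
        self.ssd = {}
        self.disk = dict(blocks)

    def promote(self, block):
        if block in self.disk:
            self.ssd[block] = self.disk.pop(block)  # move, not copy

    def read(self, block):
        return self.ssd[block] if block in self.ssd else self.disk[block]

    def write(self, block, data):
        tier = self.ssd if block in self.ssd else self.disk
        tier[block] = data  # writes land wherever the block currently lives


cache = ReadCache({"a": 1})
cache.read("a")
tiered = TieredStore({"a": 1})
tiered.promote("a")
```

After these calls, block `"a"` exists in both `cache.primary` and `cache.ssd`, but only in `tiered.ssd`. Because the tiered store has no second copy to fall back on, whatever tracks block locations has to be part of the storage virtualization layer itself.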

SSDs have solved the boot-storm startup issues that plague many virtual environments, but VMware’s recent license model updates sparked increased interest in other SSD use cases. With VMware moving to a memory-based licensing model there is interest in using SSDs to accelerate VMs with a smaller memory footprint. In a tiering model, if VMDKs are created on LUNs that leverage SSDs, the virtual guest will automatically move the internal paging mechanisms within the VM to low latency SSDs. Paging is write-heavy, so the tiering model is important to ensure that the page files are leveraging SSD as they are modified (and that the less active storage doesn’t use the SSD).

We are showing this full setup at our booth (#258) at VMworld. If you are attending I would be happy to show you the setup.

Part 1: eMLC – It’s Not Just Marketing

Until recently, the model for Flash implementation has been to use SLC for the enterprise and MLC for the consumer. MLC solutions traded endurance, performance, and reliability for a lower cost while SLC solutions didn’t. The tradeoff of 10x the endurance for 2x the price led most enterprise applications to adopt SLC.

But there is a shift taking place in the industry as SSD prices start to align with the prices enterprise customers have been paying for tier 1 HDD storage (which is much higher than the cost of consumer drives). If the per-GB pricing is similar, you can add so much more capacity that endurance becomes less of a concern. Rather than only having highly transactional OLTP systems on SSDs, you can move virtually every application using tier 1 HDD storage to SSD. The biggest concern for tier 1 storage has been that the most critical datasets that reside on it cannot risk a full 10x drop in endurance.

Closing the gap: eMLC

At first glance, enterprise MLC (eMLC) sounds like Marketing is trying to pull a fast one. If there were a simple way to make MLC have higher endurance, why bother restricting it from consumer applications? eMLC sports endurance levels of 30,000 write cycles, whereas some of the newest MLC has only 3,000 write cycles (SLC endurance is generally 100,000 write cycles). There is a big reason for this restriction: eMLC makes a tradeoff to enable this endurance – retention.

It’s not commonly understood that although Flash is considered persistent, the data is slowly lost over time. For most Flash chips the retention is around 10 years – longer than most use cases. With eMLC, longer program and erase times are used with different voltage levels than MLC to increase the endurance. These changes reduce the retention to as low as three months for eMLC. This is plenty of retention for an enterprise storage system that can manage the Flash behind the scenes, but it makes eMLC impractical for consumer applications. Imagine if you didn’t get around to copying photos from your camera within a three month window and lost all the pictures!

Today, Texas Memory Systems announced its first eMLC RamSan product: the RamSan-810. This is a major announcement as we have investigated eMLC for some time (I have briefed analysts on eMLC for almost a year; here is a discussion of eMLC from Silverton Consulting, and a recent one from Storage Switzerland). TMS was not the first company to introduce an eMLC product, because the RamSan’s extremely high performance backplane and interfaces make endurance concerns more palpable. However, with the latest eMLC chips, we aggregate enough capacity to be comfortable introducing an eMLC product that bears the RamSan name.

10 TB of capacity multiplied by 30,000 write cycles equates to 300 PB of lifetime writes. This amount of total writes is difficult to achieve during the lifetime of a storage device, even at the high bandwidth the RamSan-810 can support. Applications that demand the highest performance and those with more limited capacity requirements will still be served best by an SLC solution, but very high capacity SSD deployments will shift to eMLC. With a density of 10 TB per rack unit, petabyte-scale SSD deployments are now a realistic option.
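The lifetime-write arithmetic above is easy to check, and extending it shows why the total is hard to reach. The sketch below reproduces the 300 PB figure and then estimates how long it would take to consume at a sustained write rate. The 1 GB/s rate is a hypothetical value chosen for illustration, not a RamSan-810 specification, and the model assumes ideal wear leveling with no write amplification.

```python
TB = 10**12  # decimal terabyte, in bytes
PB = 10**15  # decimal petabyte, in bytes
SECONDS_PER_YEAR = 365 * 24 * 3600


def lifetime_writes_bytes(capacity_bytes, write_cycles):
    """Total bytes writable if every cell is worn evenly (idealized)."""
    return capacity_bytes * write_cycles


def years_to_wear_out(capacity_bytes, write_cycles, write_bytes_per_sec):
    """Years of continuous writing needed to exhaust the rated cycles."""
    total = lifetime_writes_bytes(capacity_bytes, write_cycles)
    return total / write_bytes_per_sec / SECONDS_PER_YEAR


total = lifetime_writes_bytes(10 * TB, 30_000)
print(total / PB)  # 300.0

# Hypothetical sustained write rate of 1 GB/s, around the clock.
assumed_rate = 10**9
years = years_to_wear_out(10 * TB, 30_000, assumed_rate)
print(round(years, 1))
```

Under these assumptions, writing continuously at 1 GB/s would take roughly nine and a half years to use up the rated cycles, and few workloads sustain writes at that rate without pause.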

We’re just getting warmed up discussing eMLC. Stay tuned for another post soon on tier 1 disk pricing vs. eMLC.